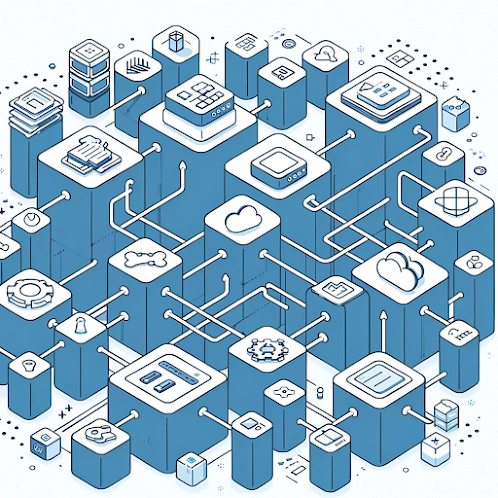

Having covered the core concepts of microservices architecture in our initial discussion, we now turn to deploying and securing these distributed systems. As the microservices approach gains traction, sound deployment strategies and security measures become essential for any organization that wants to realize its benefits.

Deployment Strategies: Virtual Machines and the Cloud

Deployment in a microservices environment can be complex because the services are distributed. Traditional physical machines are generally avoided: they use resources poorly, and packing several services onto one host undermines microservices principles such as autonomy and resilience. Virtual machines (VMs) have become a popular alternative, offering better resource utilization and fitting well with infrastructure as code (IaC) practices. Each service instance can run in its own isolated VM, which supports the design principles of microservices, and the VMs themselves run on hypervisors, specialized operating systems designed for VM management.

The cloud, however, offers even greater flexibility. Services like Amazon EC2 (IaaS) provide virtualized servers on demand, while AWS Lambda (FaaS) runs code in response to events without provisioning servers, which makes it well suited to intermittent tasks like processing image uploads. Azure App Service (PaaS), on the other hand, lets developers focus on the application while Microsoft manages the infrastructure, a model that suits continuous deployment and agile development.
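To make the image-upload case concrete, here is a minimal sketch of a Python function that AWS Lambda could invoke when a file lands in an S3 bucket. It assumes the function is wired to S3 event notifications; the names `extract_uploads` and `handler` are illustrative, and the actual image processing is left as a placeholder.

```python
from urllib.parse import unquote_plus


def extract_uploads(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3["object"]["key"])
        uploads.append((bucket, key))
    return uploads


def handler(event, context):
    """Entry point Lambda calls for each event (the name is configurable)."""
    uploads = extract_uploads(event)
    for bucket, key in uploads:
        # Placeholder (assumption): real code would download and resize the image here.
        print(f"would process s3://{bucket}/{key}")
    return {"processed": len(uploads)}
```

Because the function only runs when an upload event arrives, no server sits idle between uploads, which is the main appeal of FaaS for this kind of workload.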

Security: A Multifaceted Approach

Security within microservices must be comprehensive, addressing concerns from network communication to service authentication. HTTPS should be used for all communication so that data in transit is encrypted. At the API gateway or Backend for Frontend (BFF) API level, rate limiting is crucial to prevent abuse and overloading of services. Moreover, delegating identity management to reputable providers that adhere to the OAuth 2.0 and OpenID Connect standards helps ensure that only authenticated and authorized users can access the services. With these measures in place, security is not an afterthought but is integrated into every layer of the microservices stack.
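Rate limiting at the gateway is commonly implemented with a token bucket, although gateways usually expose it as configuration rather than code. The sketch below is one possible illustration, not the method of any particular gateway product; `TokenBucket`, `rate`, and `capacity` are names chosen for this example.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock        # injectable so the bucket can be tested
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if the request may proceed, consuming one token."""
        now = self.clock()
        elapsed = now - self.updated
        # Refill according to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In practice a gateway would keep one bucket per client, for example in a dictionary keyed by API key or user ID, and answer with HTTP 429 (Too Many Requests) when `allow()` returns `False`.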

Central Logging and Monitoring: The Eyes and Ears

Centralized logging solutions like Elastic/Kibana and Splunk provide a window into the system, allowing for real-time data analysis and historical data review, which are essential for both proactive management and post-issue analysis. Similarly, centralized monitoring tools like Nagios, PRTG, New Relic, and Graphite (a time-series metrics store) offer real-time metrics, and most add alerting so that issues are identified and addressed promptly.
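Central logging works best when every service emits logs in a consistent, machine-readable shape. The following is a minimal sketch using only Python's standard `logging` module, assuming a log shipper collects each service's standard output and forwards it to the central store; the field names and the `build_logger` helper are assumptions for this example, not a required schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service):
        super().__init__()
        self.service = service

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,  # lets the central store filter by service
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(service, stream=sys.stdout):
    """Create a logger for one service that writes JSON lines to `stream`."""
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

A service would call `build_logger("orders")` once at startup and then log as usual, for example `log.info("order %s created", order_id)`. Tagging every entry with the service name is what makes it possible to trace one request across several services in the central store.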

Automation: The Key to Efficiency

Automation in microservices is about creating a self-sustaining ecosystem. Source control systems like Git serve as the foundational layer, where code changes are tracked and managed. Upon a new commit, continuous integration tools like Jenkins automatically build and test the application, helping catch bugs before they reach later stages.

Then comes continuous delivery, where tools like Jenkins or GitLab CI automatically deploy the application to a staging environment that mirrors production. Finally, continuous deployment takes this a step further by promoting code to production once it passes all tests, achieving the DevOps dream of seamless delivery. For instance, a new feature in a social media app can go from code commit to live on the platform within minutes, without manual intervention.
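The gating logic behind this pipeline can be sketched in a few lines. The example below is a simplified model, not how Jenkins or GitLab CI are actually configured (both use their own pipeline definition files); `Stage` and `run_pipeline` are hypothetical names, and each stage is reduced to a function that reports success or failure.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage succeeds


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully, so a
    failed test stage means later stages such as production never run.
    """
    completed = []
    for stage in stages:
        if not stage.run():
            break
        completed.append(stage.name)
    return completed
```

With stages ordered as build, test, staging, and production, code reaches production only when every earlier stage has succeeded. Stopping the list at staging and promoting manually corresponds to continuous delivery, while including the production stage corresponds to continuous deployment.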

In Conclusion

The deployment and security of microservices are complex but manageable with the right strategies and tools. By leveraging virtual machines, cloud services, comprehensive security practices, centralized logging and monitoring, and embracing automation, organizations can deploy resilient, secure, and efficient microservices architectures. This approach not only ensures operational stability but also positions companies to take full advantage of the agility and scalability that microservices offer.